The Transformer is currently one of the most popular architectures for NLP. New architectures and models based on transformers are announced regularly, generating a lot of buzz and expectations in the community. Google Research and members of Google Brain initially proposed the Transformer...

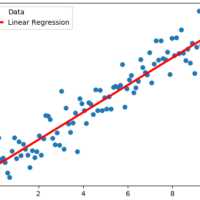

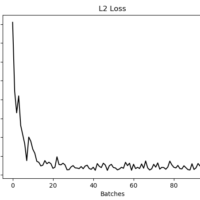

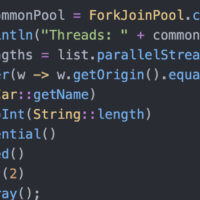
